Since OpenAI released ChatGPT, there has been a lot of speculation about what its killer app will be. And perhaps topping the list is online search. According to The New York Times, Google’s management has declared a “code red” and is scrambling to protect its online search monopoly against the disruption that ChatGPT will bring.

ChatGPT is a wonderful technology, one that has a great chance of redefining the way we create and interact with digital information. It has many potential applications, including online search.

But it might be a bit of a stretch to claim that it will dethrone Google, at least from what we have seen so far. For the moment, large language models (LLMs) have many problems that need to be fixed before they can seriously challenge search engines. And even when the technology matures, Google Search might be the one positioned to gain the most from LLMs.

LLMs and truthfulness

ChatGPT is very good at answering questions. It is almost like talking to a person who has spent hundreds of years absorbing knowledge. Its output is fluent and grammatically correct, and it can even mimic different styles of speech.

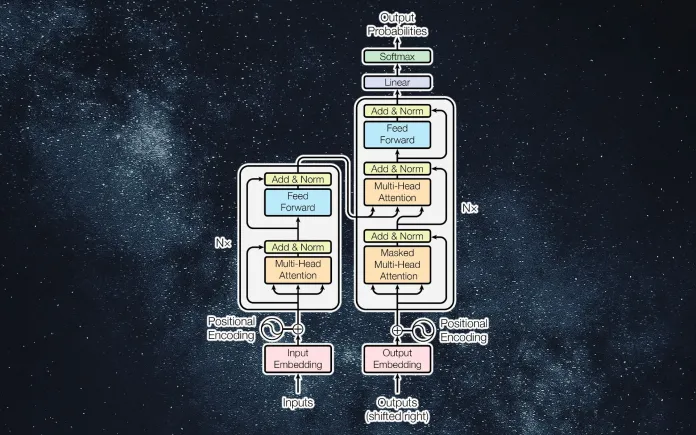

However, ChatGPT’s answers are not always correct. In fact, it often hallucinates and states completely wrong facts. Behind the veneer of literacy, ChatGPT is a very advanced autocomplete engine: it takes your prompt (and the chat history) and tries to predict what should come next. Its answers mostly look plausible, but plausible is not the same as correct.
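To make the autocomplete analogy concrete, here is a deliberately tiny sketch. It is not how ChatGPT works internally (ChatGPT uses a transformer network over subword tokens, not word counts); it is a toy bigram model, trained on a made-up three-sentence corpus, that always appends the word it has most often seen after the previous one. Note how it produces a fluent but false completion, simply because “of france” is more frequent in its training data.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def autocomplete(counts, prompt, max_words=1):
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # this word was never followed by anything in training
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus, for illustration only
corpus = [
    "paris is the capital of france",
    "rome is the capital of italy",
    "nice is in the south of france",
]
model = train_bigrams(corpus)
print(autocomplete(model, "rome is the capital of"))  # rome is the capital of france
```

Real LLMs condition on far more context than a single word, which is why they are so much more convincing, but the failure mode is similar in spirit: the model outputs what is statistically likely, not what has been checked.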

Addressing the truthfulness of ChatGPT’s output will be a major challenge. Unfortunately, there is currently no way to tell hallucinations from truths in ChatGPT’s output unless you verify its answers with some other source (using Google, maybe?). But that would be self-defeating if the point is to use the large language model as a replacement for search engines.

Now, not everything that Google or other search engines provide is truthful either. But at least they give you links to sources that you can verify. ChatGPT provides plain text with no references to the websites its information came from.

One possible solution would be to add a mechanism that links different parts of the LLM’s output to actual web pages (some companies are experimenting with this). But that is a complicated task that probably can’t be solved with a pure deep learning–based approach. It would require access to another source of information, such as a search engine index database (which is one of the reasons classic search engines are not likely to lose their relevance anytime soon).
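As a rough illustration of the attribution idea, the sketch below attaches a source URL to each sentence of an answer by finding the page that shares the largest fraction of its words, and flags sentences that no page covers well. The pages, URLs, and the 0.5 overlap threshold are all hypothetical, and word overlap is a naive stand-in for the retrieval and matching a real system would need.

```python
import re


def tokenize(text):
    """Lowercase words and numbers, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def attribute(answer_sentences, pages, min_overlap=0.5):
    """Pair each sentence with the URL of the page sharing most of its words.

    The URL is None when no page covers at least `min_overlap` of the
    sentence's words, i.e. the claim looks unsupported.
    """
    results = []
    for sentence in answer_sentences:
        words = tokenize(sentence)
        best_url, best_score = None, 0.0
        for url, text in pages.items():
            score = len(words & tokenize(text)) / len(words) if words else 0.0
            if score > best_score:
                best_url, best_score = url, score
        results.append((sentence, best_url if best_score >= min_overlap else None))
    return results


# Hypothetical mini "index" of web pages
pages = {
    "https://example.com/eiffel": "The Eiffel Tower was completed in 1889 in Paris.",
    "https://example.com/louvre": "The Louvre is the most visited museum in the world.",
}
answer = [
    "The Eiffel Tower was completed in 1889.",
    "The Eiffel Tower is made of solid gold.",
]
for sentence, url in attribute(answer, pages):
    print(url or "UNSUPPORTED", "|", sentence)
```

The weakness shows up immediately: a wrong sentence such as “The Eiffel Tower was completed in 1789.” shares six of its seven words with the Eiffel page and would be attributed to it anyway. Matching text against an index is not the same as verifying it, which is part of why this is a hard problem.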

Updating the models

Another challenge ChatGPT and other LLMs face is updating their knowledge base. Search engines have the tools and software to constantly index new and modified pages. Updating the search engine database is also a very cost-effective operation.

But for large language models, adding new knowledge requires retraining the model. Maybe not every update will require full retraining, but it will nonetheless be much more expensive than adding and modifying records in a search engine database. And it has to be done multiple times per day if it is to stay up to date with the latest news.
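To see why updating a search index is cheap, consider a toy inverted index, which maps each term to the documents that contain it. This is a minimal sketch assuming whitespace tokenization and an in-memory dictionary; production search engines use far more elaborate (compressed, sharded, distributed) structures. The point is that adding or modifying a page only touches the entries for that page’s terms, whereas new knowledge in an LLM has to be absorbed into its weights.

```python
from collections import defaultdict


class InvertedIndex:
    """Toy inverted index: maps each term to the set of IDs of documents containing it."""

    def __init__(self):
        self.postings = defaultdict(set)  # term -> {doc_id, ...}
        self.doc_terms = {}               # doc_id -> terms, so updates can drop stale entries

    def upsert(self, doc_id, text):
        """Add a new page or replace a modified one.

        The work is proportional to the size of this one page,
        not to the size of the whole index.
        """
        self.remove(doc_id)
        terms = set(text.lower().split())
        for term in terms:
            self.postings[term].add(doc_id)
        self.doc_terms[doc_id] = terms

    def remove(self, doc_id):
        """Delete a page's entries; unknown IDs are ignored."""
        for term in self.doc_terms.pop(doc_id, set()):
            self.postings[term].discard(doc_id)
            if not self.postings[term]:
                del self.postings[term]  # keep the index free of empty entries

    def lookup(self, term):
        return self.postings.get(term.lower(), set())


index = InvertedIndex()
index.upsert("page1", "old headline text")
index.upsert("page2", "another article")
index.upsert("page1", "updated headline text")  # page1 was modified
print(index.lookup("old"))      # set()
print(index.lookup("updated"))  # {'page1'}
```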

Based on GPT-3.5, ChatGPT probably has at least 175 billion parameters. Since no single processor has enough memory to hold a model that size, it has to be broken up and spread across several processors, such as Nvidia A100 GPUs. Setting up and parallelizing these processors for training and running the model is both a technical and a financial challenge.
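A quick calculation shows why the model has to be split up. The sketch assumes 2 bytes per parameter (16-bit floating point) and counts only the weights, ignoring activations, attention caches, and, for training, gradients and optimizer state, which multiply the memory needed several times over. The 175 billion figure is the estimate quoted above, not an official number.

```python
import math


def min_gpus_for_weights(num_params, bytes_per_param, gpu_memory_gb):
    """Return (weight size in GB, minimum GPUs needed just to hold the weights).

    Lower bound only: activations, caches, and training state are ignored.
    """
    weight_gb = num_params * bytes_per_param / 1e9
    return weight_gb, math.ceil(weight_gb / gpu_memory_gb)


params = 175e9  # the estimated parameter count quoted above
for gpu_gb in (40, 80):  # A100 memory variants
    weight_gb, gpus = min_gpus_for_weights(params, 2, gpu_gb)  # 2 bytes = 16-bit floats
    print(f"{weight_gb:.0f} GB of weights -> at least {gpus} x A100 {gpu_gb} GB")
```

That works out to 350 GB of weights: at least nine 40 GB cards or five 80 GB cards, before any memory is set aside for actually serving requests.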

The operators of an LLM-based search engine also need mechanisms and tools to determine which web sources are reliable and should be given priority. Again, these are classic search engine components.

Speed challenges

LLMs also have an inference speed problem. Companies like Google have created highly optimized database infrastructure that can find millions of answers in less than a second. LLMs such as ChatGPT take several seconds to compose their responses.

Search engines don’t need to scan their entire dataset for each query. They have indexing, sorting, and search algorithms that can pinpoint the right records very quickly. Therefore, even though the body of online information keeps growing, search engines are not getting slower.
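Here is a minimal sketch of how an index avoids scanning everything, assuming a toy in-memory inverted index and Boolean AND queries. The work depends on the size of the posting lists for the query terms, not on the total number of documents. Real search engines add ranking, compression, and sharding on top of this, so treat it as an illustration of the principle, not of how Google implements it.

```python
from collections import defaultdict


def build_index(docs):
    """Map each term to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return the IDs of documents containing every query term (Boolean AND).

    Only the posting lists of the query terms are read; the rest of the
    collection is never touched.
    """
    postings = [index.get(term, set()) for term in query.lower().split()]
    if not postings:
        return set()
    postings.sort(key=len)     # start from the rarest term to keep intersections small
    result = set(postings[0])  # copy so the index itself is not modified
    for plist in postings[1:]:
        result &= plist
    return result


# Hypothetical toy collection
docs = {
    1: "chatgpt answers questions",
    2: "google search results page",
    3: "chatgpt and google search",
}
index = build_index(docs)
print(search(index, "google search"))   # {2, 3}
print(search(index, "chatgpt search"))  # {3}
```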

LLMs, on the other hand, run information through the entire neural network every time they receive a prompt, and in fact once for every token (word piece) of the answer they generate. Granted, the neural network is much smaller than a search engine’s database. But running it still takes far more computation than querying an index. And because the layers of a deep neural network must be computed one after another, and the answer is produced one token at a time, there are limits to how much you can parallelize inference. Moreover, as the LLM’s training corpus grows, the model will also have to become larger to generalize well across its knowledge base.
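For a sense of scale, a commonly used rule of thumb is that a transformer’s forward pass costs about 2 floating-point operations per parameter per token. The sketch applies it to a 175-billion-parameter model and a hypothetical 500-token answer. Because each token depends on the ones before it, those 500 forward passes have to run one after another.

```python
def generation_flops(num_params, num_tokens):
    """Approximate FLOPs to generate num_tokens tokens with a dense transformer.

    Rule of thumb: ~2 FLOPs (one multiply, one add) per parameter per token
    for the forward pass. It ignores attention over the context and other
    overheads, so it understates the true cost.
    """
    return 2 * num_params * num_tokens


flops = generation_flops(175e9, 500)  # hypothetical 500-token answer
print(f"{flops:.2e} FLOPs")  # 1.75e+14 FLOPs
```

That is roughly 175 trillion operations for a single answer, compared with a handful of posting-list lookups for an indexed keyword query.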

The business model for ChatGPT

But perhaps the biggest challenge of an LLM-based search engine is the business model. Google has built an advertising empire on its search engine.

Google’s search business model is not perfect either. You rarely click on the ads that increasingly show up above the fold on the search results page. But Google’s share of the online search market is so large that even with very low click-through rates, it makes billions of dollars a year.

Google also has the power to personalize search results and ads based on the data it collects from users. This makes its business even more efficient and profitable. And let’s not forget that Google has many other products that enrich the digital profiles it creates for its users, including YouTube, Gmail, Chrome, and Android. Its ad network also extends to third-party websites and other media.

Basically, Google controls two sides of a market: the content seekers and the advertisers. By controlling both sides, it has managed to create a self-reinforcing loop in which it collects more data, improves its search results, and serves more relevant ads.

As a potential search engine, ChatGPT doesn’t have a business model yet. And it is very expensive to run. One back-of-the-envelope estimate puts the cost of serving one million users at about $100,000 per day, or around $3 million per month.

Now imagine scaling that to the roughly 8 billion search queries people run every day. Then add the costs of regularly retraining the model and the manual labor needed to fine-tune it through reinforcement learning from human feedback (RLHF).
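The arithmetic behind these figures is simple enough to check. The sketch below uses the $100,000-per-day and one-million-user numbers from the estimate above and assumes a 30-day month. The last function is hypothetical: no per-query cost for an LLM search engine is given here, so it only shows how such a cost would scale to 8 billion queries a day, using one cent as an arbitrary illustrative value.

```python
def per_user_daily_cost(daily_cost_usd, users):
    """Average daily cost per user."""
    return daily_cost_usd / users


def monthly_cost(daily_cost_usd, days=30):
    """Scale a daily cost to a month, assuming 30 days."""
    return daily_cost_usd * days


def daily_cost_at_scale(queries_per_day, cost_per_query_usd):
    """Hypothetical: total daily cost if every query cost the same amount."""
    return queries_per_day * cost_per_query_usd


daily, users = 100_000, 1_000_000  # the estimate quoted above
print(per_user_daily_cost(daily, users))  # 0.1
print(monthly_cost(daily))                # 3000000
# One cent per query (an arbitrary illustrative value) at 8 billion queries/day:
print(daily_cost_at_scale(8_000_000_000, 0.01))
```

At that volume, every cent of per-query cost adds up to $80 million per day, before any retraining or human-feedback costs.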

The costs of training and running a large language model like ChatGPT are so prohibitive that only big tech companies, which can afford to pour money into unprofitable products with no definite business model, will be able to make it work.

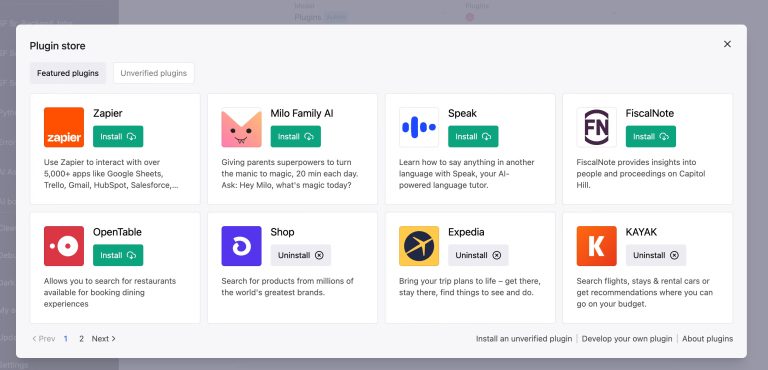

One possible path to profitability would be to deliver the LLM as a paid API, like Codex and GPT-3. But that is not the traditional business model of search engines, and I’m not sure how it could work for search. Another path would be to integrate it into Microsoft Bing as a question-answering feature, but that would merely put Bing on par with Google Search rather than deliver a different system that disrupts the search market.

Is ChatGPT a search engine?

There has been a lot of talk about ChatGPT becoming the omnipotent assistant that can answer any question, which naturally leads to the idea that it will replace Google Search.

But while an AI system that can answer questions is very useful (provided that OpenAI solves its problems), question answering is not all that online search represents. Google Search is flawed. It shows a lot of useless ads, and it often returns a lot of useless results. But it is an invaluable tool.

Most often, when I turn to Google, I don’t even know what the right question is. I just mash together a bunch of keywords, look at the results, do a bit of research, and then narrow down or modify my search. In my opinion, even a very effective question-answering model will not replace that kind of use anytime soon.

As things stand, ChatGPT and other similar LLMs look set to complement online search engines rather than replace them. And they will probably end up strengthening the position of the current search giants, which have the money, infrastructure, and data to train and run them.

Article Source: https://bdtechtalks.com/2023/01/02/chatgpt-google-search/