Chatbot testers have long envisioned a solution with two buttons: one for identifying all issues within a chatbot and another for resolving them.

Using OpenAI, we have added some impressive features to Botium Box, bringing us a step closer to that dream.

- We used OpenAI to predict the next message in a conversation.

- We integrated a new multilingual paraphraser that generates alternative phrasings while minimizing risk.

Below, we share some insights and use cases we gathered while developing these features.

Normalize Text:

The OpenAI documentation recommends checking the text for spelling errors. We can go a step further and make the text as clean and unambiguous as possible. Here are a few examples:

- Avoid line breaks (enters) inside a message. In a chat prompt, a new line marks the start of the next turn, so a line break inside the “Human” part of the chat can confuse OpenAI.

- End sentences with a period. This helps OpenAI treat the text as a complete sentence instead of trying to continue it.

- Remove unnecessary information. For chatbot testing, it is important to distinguish between a user typing the text “pizza” and pressing the “pizza” button, so we might represent the latter as “#user button:pizza”. For OpenAI, however, this extra information only causes confusion, so it is better to use just “#user pizza”.
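The three rules above can be combined into a small pre-processing step. The sketch below is an illustration, not Botium Box code: it assumes a button click arrives as message text prefixed with `button:` (the `#user` speaker label is added separately), and the name `normalize_message` is hypothetical.

```python
import re

# Hypothetical marker for button clicks, as in "#user button:pizza".
BUTTON_PREFIX = "button:"


def normalize_message(text):
    """Clean one chat message before it goes into an OpenAI prompt."""
    text = text.strip()
    # Drop the button marker so a click reads like typed text.
    if text.startswith(BUTTON_PREFIX):
        text = text[len(BUTTON_PREFIX):]
    # Replace line breaks and runs of whitespace with single spaces.
    text = re.sub(r"\s+", " ", text).strip()
    # End with a period unless the message already ends in . ! or ?
    if text and text[-1] not in ".!?":
        text += "."
    return text


print(f"#user {normalize_message('button:pizza')}")  # #user pizza.
```

Applying the period rule to a one-word button payload gives `pizza.`; if you prefer the bare `#user pizza` form for buttons, skip that step for them.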

Use Line Breaks Correctly in Prompts:

Whether or not a prompt ends with a line break (enter) makes a significant difference in how OpenAI interprets the text. Consider the following examples:

Example 1 (no line break at the end):

"Human: Hello, who are you?
AI: I am an AI created by OpenAI. How can I help you today?
Human: Can I ask you"

Example 2 (with a line break at the end):

"Human: Hello, who are you?
AI: I am an AI created by OpenAI. How can I help you today?
Human: Can I ask you
"

In the first example, OpenAI interprets the input as a continuation of the human message, while in the second example, it treats the input as a prompt to generate a new AI message. The distinction lies in the extra line break at the end of the prompt.
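To make the difference concrete, here is a minimal sketch that builds both prompts from the same turns. The helper `build_chat_prompt` and its `start_new_turn` flag are hypothetical names, and sending the prompt to OpenAI is left out; the point is that the two strings differ only in the final `\n`.

```python
def build_chat_prompt(turns, start_new_turn):
    """Join (speaker, text) pairs into a Human/AI transcript.

    start_new_turn=False: the prompt ends mid-line, so the model tends to
    continue the last Human message.
    start_new_turn=True: a trailing line break is appended, so the model
    tends to start a new line, i.e. the AI reply.
    """
    prompt = "\n".join(f"{speaker}: {text}" for speaker, text in turns)
    return (prompt + "\n") if start_new_turn else prompt


turns = [
    ("Human", "Hello, who are you?"),
    ("AI", "I am an AI created by OpenAI. How can I help you today?"),
    ("Human", "Can I ask you"),
]
example_1 = build_chat_prompt(turns, start_new_turn=False)  # continue Human
example_2 = build_chat_prompt(turns, start_new_turn=True)   # new AI message
print(example_2 == example_1 + "\n")  # True
```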

Creating a Multilingual Translator:

Developing a multilingual translator is relatively straightforward. The OpenAI playground includes a sample translation prompt that you can adapt to handle multiple languages. Here is an example prompt that mixes several languages:

English: See you later!

French: À tout à l'heure!

German: Ich möchte Geld überweisen.

English: I want to transfer money.

Russian: Я хотел бы заказать пиццу.

German:

This approach works well, but it requires you to state the language of the source text explicitly. In the next section, we address this limitation.
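A prompt like this can be assembled from example pairs. The sketch below is an assumption for illustration (the function name and tuple layout are hypothetical): it ends the prompt with the bare target-language label, here `German:`, so that the model's completion is the translation.

```python
def build_translation_prompt(examples, source_lang, text, target_lang):
    """Build a few-shot prompt in the "Language: sentence" format.

    examples: (source_lang, source_text, target_lang, target_text) tuples.
    The prompt ends with the bare target-language label, so the model's
    completion should be the translation.
    """
    lines = []
    for src_lang, src_text, tgt_lang, tgt_text in examples:
        lines.append(f"{src_lang}: {src_text}")
        lines.append(f"{tgt_lang}: {tgt_text}")
    # The source language of the new text has to be stated explicitly.
    lines.append(f"{source_lang}: {text}")
    lines.append(f"{target_lang}:")
    return "\n".join(lines)


prompt = build_translation_prompt(
    [
        ("English", "See you later!", "French", "À tout à l'heure!"),
        ("German", "Ich möchte Geld überweisen.", "English", "I want to transfer money."),
    ],
    source_lang="Russian",
    text="Я хотел бы заказать пиццу.",
    target_lang="German",
)
print(prompt)
```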

Multilingual Translator Without Specifying the Source Language:

To create a multilingual translator without specifying the source language, you can restructure the prompt as follows:

Text: See you later!

LanguageOfResult: French

Result: À tout à l'heure!

Text: Ich möchte Geld überweisen.

LanguageOfResult: English

Result: I want to transfer money.

Text: Я хотел бы заказать пиццу.

LanguageOfResult: German

Result:

By reorganizing the prompt this way, you no longer need to provide the source language. To optimize the translator further, experiment with additional prompt tweaks and model settings.
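The restructured format can be generated the same way. In this hedged sketch only the target language is passed in, and the source language never appears in the prompt; `build_target_only_prompt` is a hypothetical helper, and the call to OpenAI is left out.

```python
def build_target_only_prompt(examples, text, target_lang):
    """Build a Text / LanguageOfResult / Result prompt.

    examples: (source_text, target_lang, result) tuples. No source
    language is given anywhere; the model has to infer it.
    """
    blocks = [
        f"Text: {src}\nLanguageOfResult: {lang}\nResult: {result}"
        for src, lang, result in examples
    ]
    # The final block leaves Result empty for the model to complete.
    blocks.append(f"Text: {text}\nLanguageOfResult: {target_lang}\nResult:")
    return "\n".join(blocks)


prompt = build_target_only_prompt(
    [
        ("See you later!", "French", "À tout à l'heure!"),
        ("Ich möchte Geld überweisen.", "English", "I want to transfer money."),
    ],
    "Я хотел бы заказать пиццу.",
    "German",
)
print(prompt)
```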

Multilingual Translator with Language Detection:

By adding a new field, you can prompt OpenAI to detect the language of the input text:

Text: See you later!

LanguageOfResult: French

Result: À tout à l'heure!

LanguageOfText: English

Text: Ich möchte Geld überweisen.

LanguageOfResult: English

Result: I want to transfer money.

LanguageOfText: German

Text: Я хотел бы заказать пиццу.

LanguageOfResult: German

While this experiment is interesting, the language detection is not always accurate. However, it does reveal some key insights:

- From the examples, OpenAI can learn to associate specific input fields (Text, LanguageOfResult) with the corresponding output fields (Result, LanguageOfText). Technically, these are not parameters and return values for OpenAI, but for us they work as such.

- OpenAI can handle more complex tasks: what looks like a two-step problem to us (translating the text and detecting its language) can be a single task for OpenAI.

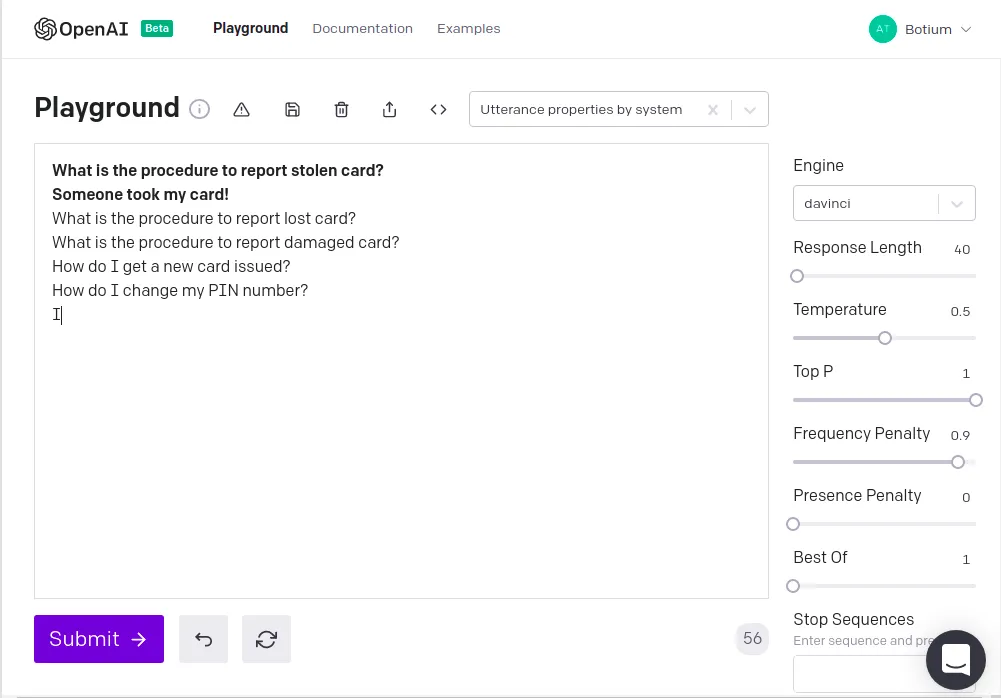
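Treating the fields as parameters and return values suggests a small wrapper: build the prompt from the input fields, then read the output fields back from the completion. The sketch below assumes the model answers with `Field: value` lines and may continue with an invented `Text:` example, which the parser cuts off. The helper names are hypothetical, and the sample completion is written by hand for illustration, not real model output.

```python
OUTPUT_FIELDS = ("Result", "LanguageOfText")


def build_detection_prompt(examples, text, target_lang):
    """examples: (text, target_lang, result, source_lang) tuples.

    The prompt stops after the input fields of the last block, so the model
    is expected to produce the output fields (Result, LanguageOfText).
    """
    lines = []
    for ex_text, ex_target, ex_result, ex_source in examples:
        lines += [
            f"Text: {ex_text}",
            f"LanguageOfResult: {ex_target}",
            f"Result: {ex_result}",
            f"LanguageOfText: {ex_source}",
        ]
    lines += [f"Text: {text}", f"LanguageOfResult: {target_lang}"]
    return "\n".join(lines)


def parse_outputs(completion):
    """Read 'Field: value' lines from a completion into a dict of outputs."""
    outputs = {}
    for line in completion.splitlines():
        name, sep, value = line.partition(":")  # split on the first colon only
        name = name.strip()
        if not sep:
            continue  # skip blank or unlabeled lines
        if name == "Text":
            break  # the model started inventing a new example; ignore the rest
        if name in OUTPUT_FIELDS:
            outputs[name] = value.strip()
    return outputs


examples = [
    ("See you later!", "French", "À tout à l'heure!", "English"),
    ("Ich möchte Geld überweisen.", "English", "I want to transfer money.", "German"),
]
prompt = build_detection_prompt(examples, "Я хотел бы заказать пиццу.", "German")

# Hypothetical completion, written by hand for illustration.
completion = "\nResult: Ich möchte eine Pizza bestellen.\nLanguageOfText: Russian\nText: Hi"
print(parse_outputs(completion))
```

Because detection is not always accurate, the parsed `LanguageOfText` is best treated as a hint rather than a verified label.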

Paraphraser:

There are many ways to write a prompt for a paraphraser, each with its own advantages and disadvantages. Ours is simple, yet it meets our requirements.

Pros:

- Flexibility: It generates creative and diverse alternatives.

- Efficiency: The prompt is only two lines long, which keeps token usage and costs low.

- Language-agnostic: The paraphraser isn’t tied to any specific language. Some prompts consist of a training section (examples) and a request section, which can confuse the model when the prompt mixes languages. Our two-line prompt avoids this.

Cons:

- Quality: Roughly half of the generated paraphrases may not be ideal. That doesn’t make them worthless! For example, for the inputs “Someone took my card!” and “What is the procedure to report a stolen card?”, we might receive “Can I replace my card if it is lost or stolen?”. It clearly doesn’t fit as a paraphrase, but in the context of a banking chatbot, suggestions like this can point to new use cases worth exploring.
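Since a good share of the candidates needs a human look anyway, a cheap cleanup pass before review can save time. The sketch below pairs a hypothetical two-line prompt (not the actual Botium Box prompt) with a filter that drops empty candidates, duplicates, and candidates that merely repeat an input. It does not judge whether a paraphrase fits the intent, so the kept candidates still need review.

```python
def build_paraphrase_prompt(utterance):
    # Hypothetical two-line prompt with no training examples, so nothing in
    # it ties the paraphraser to one language.
    return f"Text: {utterance}\nParaphrase:"


def filter_candidates(inputs, candidates):
    """Drop empty, duplicate, and input-repeating candidates.

    Comparison is case-insensitive and ignores surrounding whitespace.
    Whether a kept candidate really fits the intent is left to a human.
    """
    seen = {text.strip().casefold() for text in inputs}
    kept = []
    for candidate in candidates:
        candidate = candidate.strip()
        key = candidate.casefold()
        if candidate and key not in seen:
            seen.add(key)
            kept.append(candidate)
    return kept


inputs = ["Someone took my card!", "What is the procedure to report a stolen card?"]
# Hand-written candidates for illustration, not real model output.
candidates = [
    "someone took my card!",
    "My card was stolen.",
    "My card was stolen. ",
    "",
    "Can I replace my card if it is lost or stolen?",
]
print(filter_candidates(inputs, candidates))
```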

In conclusion, OpenAI makes it possible to build useful tools such as multilingual translators, paraphrasers, and, with some caveats about accuracy, language detectors. Each approach has its pros and cons, and experimenting with different prompts and structures is how you improve the results. As we continue to explore and refine these techniques, we expect to build more advanced and effective solutions on top of OpenAI.